In a recent LIGO-Virgo-KAGRA (LVK) publication, we present new measurements of the Hubble constant (H₀) and constraints on possible deviations from Einstein’s theory of general relativity at cosmological scales. We use data from 76 newly detected gravitational-wave events observed in the first part of the fourth LVK observing run, adding to previously available catalogs, which together make the new GWTC-4.0 Gravitational-Wave Transient Catalog. By analyzing gravitational-wave signals from 142 sources, we directly measure the luminosity distance to each binary and the redshifted masses of its two compact objects. Using features in the mass distribution of black holes and neutron stars, and the distribution of potential host galaxies from the galaxy catalog GLADE+ within each gravitational wave’s localization volume, we obtain statistical information on the source’s redshift. Combining these distance and redshift estimates provides independent constraints on the Hubble constant, free from the traditional cosmic distance ladder. Additionally, we place new limits on modifications to gravitational-wave propagation that could signal physics beyond general relativity. These results demonstrate the growing power of gravitational-wave cosmology to independently measure the expansion rate of the universe and test the fundamental laws that govern it.

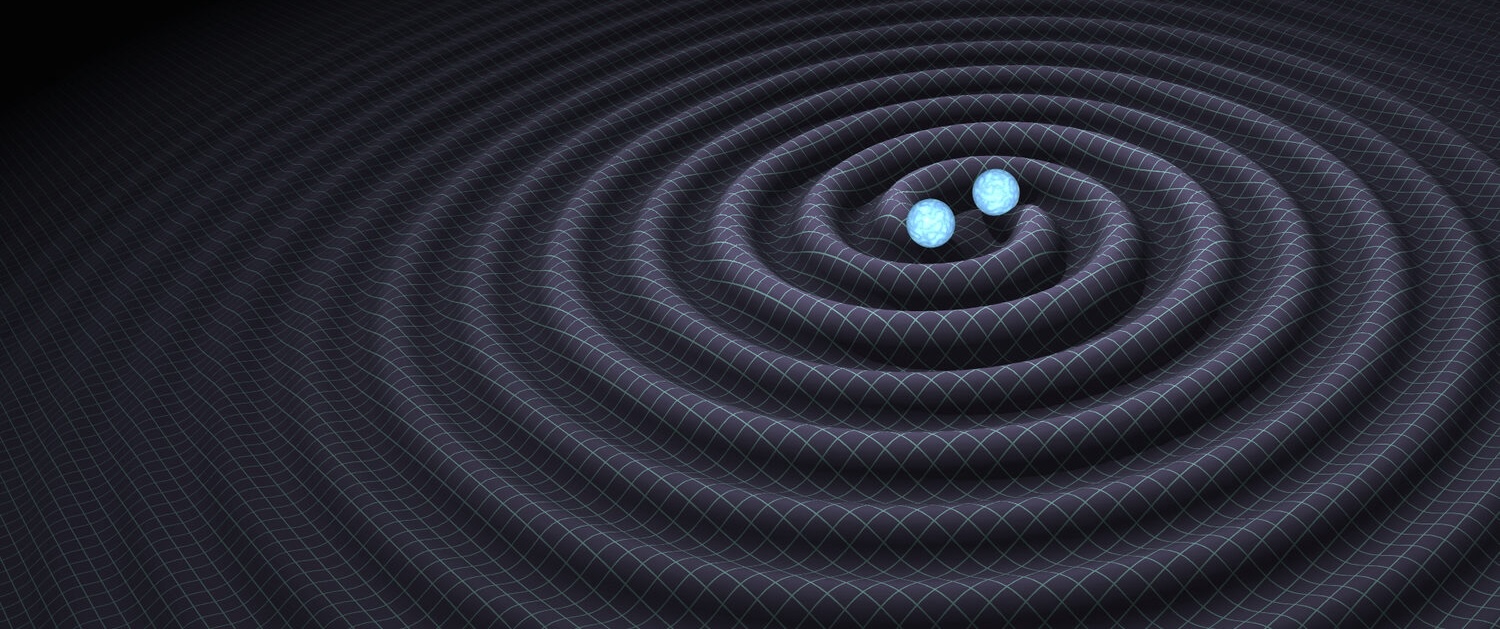

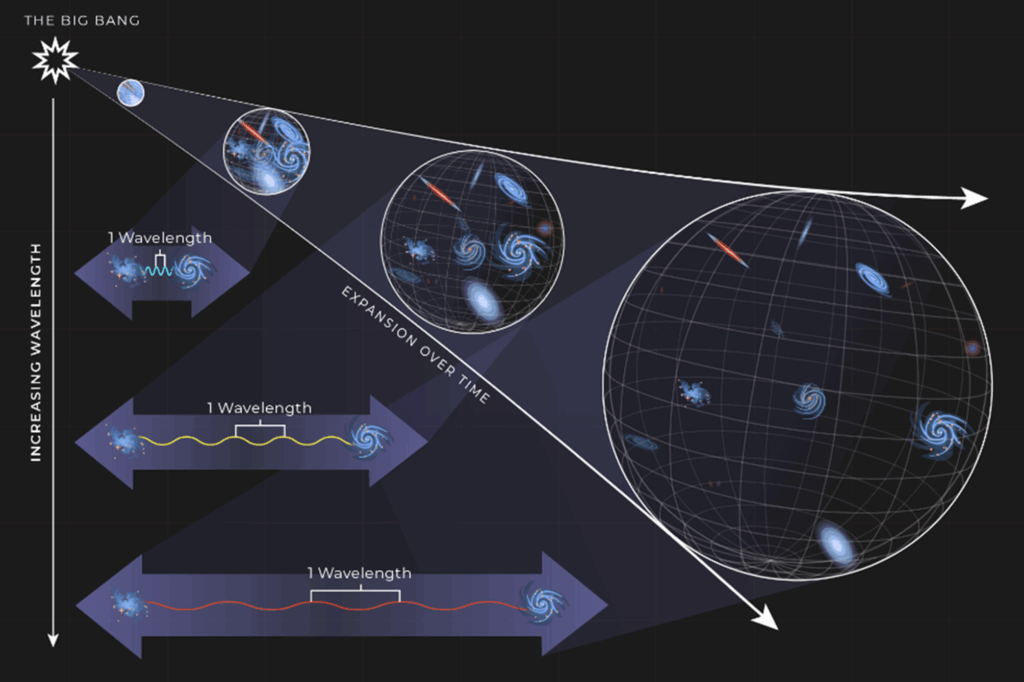

Figure 1: Schematic illustrating cosmic expansion and the cosmological redshift. As the universe expands, galaxies are carried further apart from each other, analogous to dots on the surface of an inflating balloon, and the light from distant galaxies appears stretched to longer, redder wavelengths. This redshift also affects gravitational waves emitted by distant sources. [Credit: NASA Scientific Visualization Studio]

Standard sirens, cosmic expansion, and dark energy

In the 1920s, Georges Lemaître and Edwin Hubble made the revolutionary discovery that the universe is expanding (see Figure 1). Since then the study of the universe, known as cosmology, has grown into a rich, independent discipline that aims to explain how the universe formed and evolved using a model defined by just a handful of fundamental parameters. Today, this model is known as ‘Lambda CDM’ and is considered the ‘Standard Model’ of cosmology. It is grounded in Albert Einstein’s theory of General Relativity.

Yet modern cosmology still faces major open questions. One of the biggest puzzles concerns a key parameter of the Standard Model of cosmology: the Hubble constant, or H₀. This number, expressed in kilometers per second per megaparsec (km s⁻¹ Mpc⁻¹), describes how fast the local universe is expanding; in simple terms, it measures how quickly distant objects are moving away from each other. However, independent methods give different answers for its value. If we look at the afterglow of the Big Bang, the cosmic microwave background (CMB), we find a value of around 68 km s⁻¹ Mpc⁻¹. But when we measure local distances using nearby exploding stars called type Ia supernovae and Cepheid variable stars, we get about 74 km s⁻¹ Mpc⁻¹. Today, the gap between these two values can’t be explained by measurement errors alone. This so-called ‘Hubble tension’ has become one of the most pressing puzzles in modern cosmology.

Meanwhile, another mystery looms: the universe’s expansion is accelerating, as first revealed by measurements of type Ia supernovae in 1998. This speed-up of the cosmic expansion rate requires us to add a new component to the universe, called dark energy, and understanding its true nature is one of the great quests of modern cosmology. Galaxy surveys carried out with large ground-based or space-based telescopes, e.g. DESI and Euclid, are currently mapping the distribution of galaxies across the cosmos with exquisite precision. This will enable us to test whether the distribution of galaxies can be fully explained by Einstein’s theory, or whether something new is at play on the largest scales of the universe.

In this context, gravitational waves (GWs) have recently made their appearance as a powerful cosmological probe. First predicted by Einstein a century ago, GWs are propagating ripples in spacetime, produced by cataclysmic events in the universe like pairs of black holes or neutron stars spiraling together and merging. According to general relativity, GWs travel freely across the universe at the speed of light, causing tiny shifts in the relative distances between freely falling masses on Earth — an effect first detected in 2015 by the LIGO-Virgo collaboration. Unlike other cosmic distance markers, GWs provide a direct way to measure how far away an event occurred, without relying on the complex “cosmic distance ladder” used for supernovae and Cepheids. Our measurements of distances using GWs rely on accurate computations of the expected form of the signal observed by GW detectors on Earth according to General Relativity. Comparing the observed amplitude and phase of the signal to predictions, we can directly determine the distance of each source. GW sources are therefore called “standard sirens” — a nod to the “standard candles” (objects with known intrinsic brightness, such as type Ia supernovae) that astronomers have used for decades. By combining the distance measured from a standard siren with information about how fast its host galaxy is moving away from us, we can make an independent measurement of the Hubble constant.
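For nearby sources, the last step above reduces to simple arithmetic. The following minimal sketch assumes the low-redshift Hubble law, H₀ ≈ cz/d, and uses round, illustrative numbers rather than any measured values from the publication; the function name is our own.

```python
C_KM_S = 299_792.458  # speed of light in km/s


def hubble_constant_low_z(distance_mpc: float, redshift: float) -> float:
    """Low-redshift Hubble law: H0 ~ c * z / d, returned in km/s/Mpc."""
    return C_KM_S * redshift / distance_mpc


# Illustrative inputs only: a siren at 40 Mpc whose host galaxy has z = 0.01.
h0 = hubble_constant_low_z(distance_mpc=40.0, redshift=0.01)
print(f"H0 ~ {h0:.1f} km/s/Mpc")
```

This approximation ignores the galaxy's own motion relative to the cosmic flow and breaks down at larger redshifts, where a full cosmological model is needed.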

But there’s more: GWs may also help test whether Einstein’s theory of gravity holds true at the largest cosmological scales. If new physics — like an unknown dark energy field — is behind the universe’s accelerated expansion, it could change how GWs travel across large cosmic distances, and in particular how their amplitude (their size) changes during propagation. This effect implies that the distance inferred from GWs would differ from the distance inferred from light emitted by the same source, *if* gravity is different from Einstein’s general relativity. Crucially, parameters describing this phenomenon can be constrained using the same methods employed to determine the Hubble constant. This means GWs can be used to target two major open questions in cosmology at once — with an approach that is also independent of other methods historically used to measure cosmic expansion.

In this study, the LVK collaboration has used GW observations not just to refine measurements of the Hubble constant, but also to put constraints on how GWs might behave differently from Einstein’s predictions.

Methods

In contrast to the straightforward measurement of distance, GW signals do not directly yield measurements of the redshift of their source. Just as light from a receding galaxy is stretched to longer (redder) wavelengths, the masses of merging black holes appear larger than they actually are due to the expansion of the universe. This means we cannot distinguish whether we’re seeing a truly heavier object or an object moving away from us faster — at least not from the GW signal alone.
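The degeneracy described above follows from the standard relation between the mass measured at the detector and the true (source-frame) mass, m_det = (1 + z) m_source. A short sketch with two hypothetical systems shows how a nearby heavy black hole and a distant lighter one can look the same in the data:

```python
def detector_frame_mass(source_mass: float, redshift: float) -> float:
    """Redshifted (detector-frame) mass, in the same units as source_mass."""
    return (1.0 + redshift) * source_mass


# Two hypothetical systems (masses in solar masses), chosen for illustration.
near_heavy = detector_frame_mass(source_mass=36.0, redshift=0.0)
far_light = detector_frame_mass(source_mass=30.0, redshift=0.2)

print(f"near, heavy: {near_heavy:.1f}   far, light: {far_light:.1f}")
```

Both systems would appear with a detector-frame mass of about 36 solar masses, which is why extra information on the redshift is needed.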

However, some sources are expected to emit an accompanying flash of light — in particular, mergers containing at least one neutron star. When this is detected in coincidence with the GW signal, astronomers can pinpoint the host galaxy and measure its redshift directly. These sources are therefore referred to as “bright sirens”. This was the case for the first binary neutron star merger detected by GW observatories, GW170817, which also produced a bright electromagnetic signal. That signal led to the quick identification of its host galaxy (NGC 4993), and combining its redshift with the distance from the GW data provided the first standard siren measurement of the Hubble constant.

Unfortunately, no new binary neutron stars with such coincident electromagnetic signals were found in the first part of the fourth LVK observing run. For all other sources, mainly binary black holes, we rely on statistical methods to estimate the redshift. These methods use some external, or “prior”, knowledge of the overall population of sources. Crucially, the observed distribution of source properties differs from the true, underlying population. This happens partly because of the nature of our detectors, which are more likely to pick up certain sources (such as more massive or nearby ones) than others (lighter or farther away). Moreover, if the expansion history of the universe or the laws of gravity were different (for example, if dark energy had unexpected properties), this would change the relationship between the true and observed populations. Comparing the expected source population properties to the observed ones, while carefully accounting for the different detection probabilities, or “selection bias”, therefore allows us to place constraints on properties of the universe such as its expansion history.

We use a statistical approach, globally referred to as the “dark siren” method, that simultaneously exploits two complementary sources of information on the underlying population properties.

The first source of information relates to the mass distribution of binary black holes, also known as the “spectral siren” method. Suppose that black holes in the universe form preferentially around a specific mass, due to intrinsic astrophysical processes. For example, theory predicts that stellar-mass black holes should have a maximum mass because extremely massive stars explode so violently (through what is known as a pair-instability supernova) that they leave no black hole behind. By comparing the expected maximum mass scale with what we observe, and knowing how cosmic expansion shifts these masses, we can infer the expansion rate. In particular, for the first time in an LVK cosmology publication, we use a model covering the full range of source masses, which encompasses different types of mergers: binary black holes, binary neutron stars, and systems made of a black hole and a neutron star.
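To make the spectral siren idea concrete, here is a toy sketch, not the population analysis used in the publication. It assumes a single sharp feature in the mass distribution at a known source-frame mass, a flat Lambda CDM universe with a matter fraction of 0.3, and noise-free measurements. All function names and numbers are hypothetical. The redshift follows from the ratio of observed to true mass, and H₀ then follows from the measured luminosity distance.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def comoving_integral(z, omega_m=0.3, steps=2000):
    """Trapezoidal estimate of the integral of 1/E(z') from 0 to z (flat LambdaCDM)."""
    def inv_e(x):
        return 1.0 / math.sqrt(omega_m * (1.0 + x) ** 3 + 1.0 - omega_m)
    h = z / steps
    total = 0.5 * (inv_e(0.0) + inv_e(z)) + sum(inv_e(i * h) for i in range(1, steps))
    return total * h


def luminosity_distance_mpc(z, h0, omega_m=0.3):
    """Luminosity distance in Mpc for a flat LambdaCDM universe."""
    return (1.0 + z) * (C_KM_S / h0) * comoving_integral(z, omega_m)


def h0_from_mass_feature(m_det, m_src, d_l_mpc, omega_m=0.3):
    """Infer H0 from where a mass feature is observed versus where it truly sits."""
    z = m_det / m_src - 1.0  # redshift implied by the shifted mass scale
    return C_KM_S * (1.0 + z) * comoving_integral(z, omega_m) / d_l_mpc


# Round trip with made-up inputs: a 45 solar-mass feature seen at z = 0.5.
z_true, h0_true, m_src = 0.5, 70.0, 45.0
d_l = luminosity_distance_mpc(z_true, h0_true)
h0_rec = h0_from_mass_feature((1.0 + z_true) * m_src, m_src, d_l)
print(f"recovered H0 = {h0_rec:.1f} km/s/Mpc")
```

In practice the mass feature is not known in advance and individual measurements are noisy, so the feature and the cosmology have to be inferred together from many events.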

The second source of information comes from the distribution of galaxies—also called the “galaxy catalog” method. Assuming that black holes form in galaxies, we compare the distribution of galaxies in the region where each merger likely happened with large galaxy catalogs to identify possible host galaxies. Because the GW sky maps are imprecise, this host association is probabilistic: there can be hundreds or thousands of potential host galaxies, each with a different likelihood of being the true one. The probability depends on the Hubble constant and dark energy properties, which link distance and redshift, but can also depend on intrinsic galaxy properties, such as their mass or luminosity. We assume that the probability of a galaxy hosting a gravitational-wave merger is proportional to its luminosity in infrared light. We check that this assumption doesn’t affect our final results in any significant way. Importantly, we must also correct for the fact that galaxy surveys are incomplete — some faint or distant galaxies may be missing altogether.
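A toy version of the galaxy catalog method can be sketched as follows. This is a simplified illustration under several assumptions: the low-redshift Hubble law, a Gaussian distance measurement, a complete catalog, and no selection effects. The galaxy list and all names are hypothetical. Each candidate host contributes to the likelihood of a given H₀ with a weight proportional to its luminosity.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def h0_likelihood(h0, galaxies, d_obs_mpc, sigma_mpc):
    """Unnormalised toy likelihood of H0 for one event.

    galaxies: list of (redshift, luminosity) pairs for candidate hosts.
    """
    total_lum = sum(lum for _, lum in galaxies)
    like = 0.0
    for z, lum in galaxies:
        d_pred = C_KM_S * z / h0          # distance predicted for this host
        weight = lum / total_lum          # luminosity-weighted host probability
        like += weight * math.exp(-0.5 * ((d_obs_mpc - d_pred) / sigma_mpc) ** 2)
    return like


# Hypothetical candidate hosts: (redshift, luminosity in arbitrary units).
galaxies = [(0.020, 1.0), (0.023, 3.0), (0.030, 0.5)]
grid = [50 + i for i in range(71)]        # H0 values from 50 to 120 km/s/Mpc
values = [h0_likelihood(h, galaxies, d_obs_mpc=100.0, sigma_mpc=15.0) for h in grid]
best = grid[values.index(max(values))]
print(f"toy likelihood peaks near H0 = {best} km/s/Mpc")
```

With a single event the result is broad and can have several bumps, one per plausible host; combining many events is what sharpens the measurement.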

Finally, because our detectors are more sensitive to certain black hole masses and distances, we must carefully correct for these selection effects to avoid bias. This means we need to estimate the black hole population properties and the cosmic expansion history at the same time, while also accounting for the redshift information from the galaxy catalogues. In this work, we make a major step forward compared to earlier LVK analyses, where these pieces of information were combined in separate stages as a trade-off between accuracy and computational cost. Thanks to improvements in our data analysis pipelines, we now perform a full, simultaneous measurement — the statistically correct way to get the most accurate results.
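The need for a selection correction can be illustrated with a small Monte Carlo experiment. This is a deliberately crude sketch: it assumes sources spread uniformly in volume at low redshift, a detector that sees everything closer than a fixed horizon distance, and the low-redshift Hubble law. The horizon, the maximum redshift, and the function name are all hypothetical.

```python
import random

C_KM_S = 299_792.458  # speed of light in km/s


def detection_fraction(h0, d_horizon_mpc=500.0, z_max=0.3, n_draws=100_000, seed=0):
    """Fraction of a toy population that falls inside the detector horizon."""
    rng = random.Random(seed)
    detected = 0
    for _ in range(n_draws):
        z = z_max * rng.random() ** (1.0 / 3.0)   # uniform in volume: p(z) ~ z^2
        if C_KM_S * z / h0 < d_horizon_mpc:       # source is close enough to detect
            detected += 1
    return detected / n_draws


for h0 in (60.0, 70.0, 80.0):
    print(f"H0 = {h0:.0f}: detected fraction ~ {detection_fraction(h0):.3f}")
```

In this toy model the detectable fraction grows with H₀, because a larger H₀ places a source of given redshift closer to us. Dividing each event's likelihood by this fraction removes the resulting preference for large H₀ values.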

Results

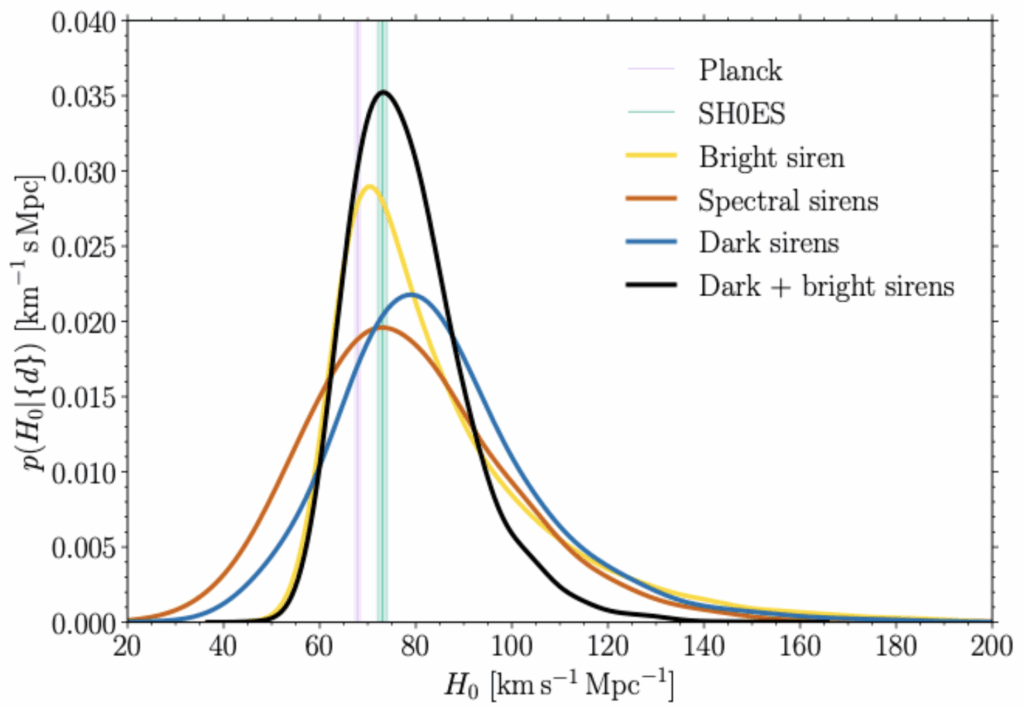

Figure 2 shows our Hubble constant (H₀) measurement. The best estimate comes from combining the dark siren analysis with the bright siren result of GW170817. From the position and width of the peak of the curve, we obtain H₀ = $76.6^{+13.0}_{-9.5}$ km s⁻¹ Mpc⁻¹. Here the superscript and subscript numbers give the upper and lower uncertainties on this measurement at the 68% credible level. Earlier LVK analyses using galaxy catalogs assumed a fixed model for the population of compact objects, which made the constraints on H₀ unrealistically tight. The method used in this work reaches similar precision but is more statistically robust because parameters describing the population of compact objects, such as the position of preferential scales in the mass distribution, are now jointly measured with the cosmological ones.

Figure 2: (Figure 5 from our publication). Posterior probability distributions for the values of the Hubble constant (H₀) from different combinations of the full GWTC-4.0 dataset. The best result is given by the black curve, which is a combination of GWTC-4.0 “dark sirens” with the “bright siren” GW170817. For comparison, results with only dark sirens (blue), spectral sirens (orange), and the single bright siren (yellow) are also shown.
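How two results are combined and then summarised as a peak with asymmetric uncertainties can be sketched on a grid of H₀ values. The example below assumes that the two inputs are independent and share a flat prior, so that they can simply be multiplied, and it reports the narrowest region containing 68% of the probability. Whether the publication uses exactly this interval definition is an assumption on our part; the input curves are made-up Gaussians and the function names are our own.

```python
import math


def combine_and_summarise(grid, like_a, like_b, level=0.68):
    """Multiply two gridded likelihoods and return (peak, lower, upper).

    Assumes a uniformly spaced grid and a single-peaked combined posterior.
    """
    post = [a * b for a, b in zip(like_a, like_b)]
    norm = sum(post)
    post = [p / norm for p in post]
    # Add grid points from most to least probable until `level` is enclosed.
    order = sorted(range(len(grid)), key=lambda i: post[i], reverse=True)
    mass, kept = 0.0, []
    for i in order:
        kept.append(i)
        mass += post[i]
        if mass >= level:
            break
    return grid[order[0]], min(grid[i] for i in kept), max(grid[i] for i in kept)


def gaussian(x, mean, sigma):
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)


# Made-up inputs: two broad measurements centred at 70 and 80 km/s/Mpc.
grid = [20.0 + 0.1 * i for i in range(1201)]
like_a = [gaussian(h, 70.0, 10.0) for h in grid]
like_b = [gaussian(h, 80.0, 10.0) for h in grid]
peak, lower, upper = combine_and_summarise(grid, like_a, like_b)
print(f"H0 = {peak:.1f} (+{upper - peak:.1f}, -{peak - lower:.1f}) km/s/Mpc")
```

The combined curve is narrower than either input, which is the basic reason why adding a bright siren to the dark siren analysis tightens the result.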

Our results indicate that the use of a comprehensive model for the mass distribution of compact binary mergers leads to a 50% improvement over dark siren constraints with models using only binary black hole mergers, despite the inclusion of just five additional events containing at least one neutron star. The estimate from dark sirens alone is still slightly broader than the result obtained from the single bright siren GW170817, which underscores the importance of detecting more multi-messenger sources. However, the measurement of the Hubble constant obtained using spectral sirens constitutes an improvement of 60% compared to previous LVK results.

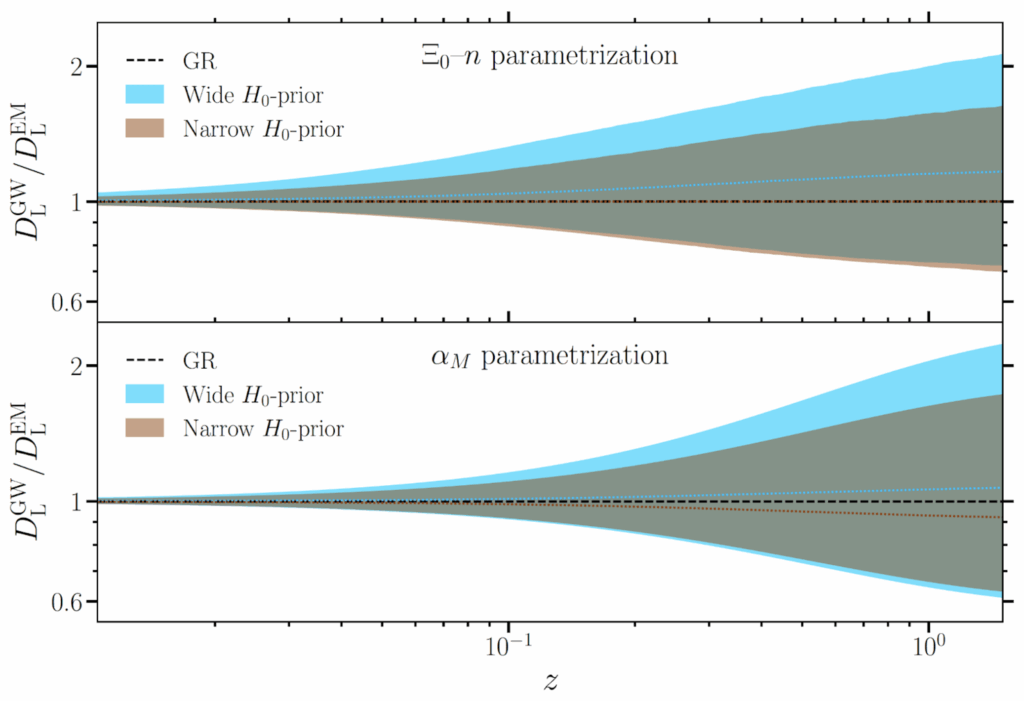

Figure 3 presents our constraints on possible deviations from Einstein’s general relativity in how gravitational waves propagate through an expanding universe. Specifically, we examine the ratio between the distance measured from the gravitational-wave amplitude (labelled $D_L^{\mathrm{GW}}$) and the distance that would be measured from an electromagnetic counterpart (labelled $D_L^{\mathrm{EM}}$). If general relativity is correct, these two distances should match exactly, so the ratio should equal one at all redshifts (black dashed line). We test this using two different parameterizations of the effect and place limits on the corresponding parameters. The top and bottom panels of the figure show the results for each model, which agree well with each other. The colored bands indicate the 90% credible intervals from GWTC-4.0. We find no evidence for deviations from general relativity, with our best measurement constraining such deviations at the 60% level (68.3% credible interval), improving previous non-LVK constraints by about 40%. The bright siren GW170817 does not contribute significantly here, because this effect builds up during propagation and only sources at non-negligible redshift are informative. GW170817, at redshift z ≈ 0.01, is simply too close.

Figure 3: (Figure 10 from our publication). Ratio of the distance measured from gravitational-wave sources to the distance that would be measured from an electromagnetic emission from the same source, as a function of the source redshift. If the theory of general relativity holds, the two are expected to coincide, so that the ratio would be identically equal to one at all redshifts. Colored bands show the constraint obtained from GWTC-4.0 at 90% credible level. We find no evidence for deviations from general relativity. The upper and lower panels refer to two different parametric forms adopted to describe this effect, which we find to give consistent results.
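As an illustration of what such a parametric form can look like, the sketch below uses one form that is common in the literature, in which the distance ratio equals Ξ₀ + (1 − Ξ₀)/(1 + z)ⁿ. This summary does not name the two parameterizations, so treating this as one of them is an assumption, and the parameter values are arbitrary. General relativity corresponds to Ξ₀ = 1.

```python
def distance_ratio_xi0(z: float, xi0: float, n: float) -> float:
    """Toy ratio of GW to electromagnetic luminosity distance.

    Assumed form: xi0 + (1 - xi0) / (1 + z)**n, equal to 1 when xi0 = 1.
    """
    return xi0 + (1.0 - xi0) / (1.0 + z) ** n


# Arbitrary illustrative deviation from general relativity: xi0 = 1.5, n = 2.
for z in (0.01, 0.5, 1.0):
    ratio = distance_ratio_xi0(z, xi0=1.5, n=2.0)
    print(f"z = {z:<4}: ratio = {ratio:.3f}")
```

Even for this large assumed deviation, the ratio at z = 0.01 differs from one by only about 1%, which illustrates why a source as close as GW170817 carries little information on this effect.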

Finally, the GWTC-4.0 data do not place any constraints either on the dark matter content of the universe or on the dark energy equation of state. The most impactful effect of dark energy instead manifests through modified gravitational-wave propagation.

Summary and future prospects

The latest results from the LIGO-Virgo-KAGRA collaboration provide a new, independent measurement of the Hubble constant, and updated constraints on possible deviations from Einstein’s general relativity at cosmological scales. The best estimate of the Hubble constant, combining statistical “dark sirens” data with the single known bright siren GW170817, is $76.6^{+13.0}_{-9.5}$ km s⁻¹ Mpc⁻¹, consistent with previous gravitational-wave-based measurements but obtained with improved statistical treatment. The analysis shows that including a more complete range of source types leads to significantly tighter constraints than using only binary black holes, even though no new bright sirens were found during this observing run.

The presence of distinct features in the black hole mass spectrum — preferential mass scales where black holes tend to form — is currently the main source of cosmological information for events without an electromagnetic counterpart. The additional information from host galaxy catalogs improves the measurement by only about 8.6%, mainly because of the limited completeness of the GLADE+ catalog used here, and the fairly poor sky localization of events detected so far in the fourth observing run. Both of these factors are expected to improve soon: the Virgo detector’s increased sensitivity in the remaining part of the fourth observing run should lead to better-localized events, and a more complete catalog, UpGLADE, is in preparation.

Our study also tests whether gravitational waves might propagate differently from the prediction of general relativity across cosmic distances, a key probe of ideas related to dark energy. No deviation from general relativity is found, with constraints now about 40% tighter than previous non-LVK gravitational wave limits.

These results show that, with more data on the way and robust statistical methods now in place, gravitational-wave cosmology is well-positioned to tackle some of the biggest open questions in modern physics.

Find out more:


- Read a free preprint of the full scientific article here or on arxiv.

- Gravitational-Wave Open Science Centre data release for GWTC-4.0 available here.
